In the rapidly evolving digital landscape, businesses are increasingly adopting Generative AI (GenAI) technologies to stay competitive and innovate. Large Language Models (LLMs) like GPT-4 have transformed the way we handle data, automate processes, and interact with customers. However, using these tools raises significant concerns, especially about how business-sensitive data and personal information are handled. How can businesses leverage the immense benefits of GenAI without compromising data security and regulatory compliance?

This comprehensive guide explores the challenges associated with using cloud-based LLMs for sensitive data and presents viable solutions through local deployment, dedicated environments, and secure platforms like Amazon Bedrock. We will delve into popular LLMs suitable for secure implementation—such as LLaMA 2 Chat, Falcon LLM, and MPT—and compare different approaches to help you make informed decisions. Additionally, we'll highlight how partnering with experienced software development firms like A-Team Global can ease this transition.

Understanding the Risks: Why Data Security Matters

When businesses use cloud-based LLMs, every prompt and document they submit is transmitted to external servers for processing. While these providers employ robust security measures, the very act of sending sensitive information over the internet introduces inherent risks.

Data Breaches and Cyber Threats

No digital system is entirely immune to hacking. High-profile data breaches have impacted even the most secure platforms, leading to significant financial losses and reputational damage. The risk is amplified when sensitive business information or personal data is involved.

Regulatory Compliance Challenges

Regulations such as the General Data Protection Regulation (GDPR), the Health Insurance Portability and Accountability Act (HIPAA), and other data protection laws impose strict guidelines on how and where data can be stored and processed. Non-compliance can result in hefty fines and legal repercussions.

Loss of Control Over Data

Once data leaves your secure network, control over its usage diminishes. There is limited oversight on how third-party providers handle, store, or potentially share your data, raising concerns about confidentiality and intellectual property protection.

Identifying Sensitive Data: What Needs Protection?

Before implementing solutions, it's crucial to recognize the types of data that require stringent protection.

Business-Sensitive Data

This includes proprietary information such as financial records, trade secrets, strategic business plans, and intellectual property. Unauthorized access to this data can undermine competitive advantages and affect market positioning.

Personal Data

Personal data encompasses any information related to identifiable individuals, such as employees or customers. This includes names, addresses, contact details, and more sensitive information like social security numbers or health records.

Protecting this data is not just about compliance; it's about maintaining trust with your stakeholders and upholding your company's reputation.
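To make the personal-data categories above concrete, the sketch below flags a few common patterns in text before it is sent to any external service. The regular expressions, labels, and the `find_pii` and `redact` helpers are illustrative assumptions, not a complete detector: they only catch US-style Social Security numbers, email addresses, and North American phone numbers, and will miss names, addresses, and health details entirely.

```python
import re

# Illustrative patterns only; real PII detection needs far broader coverage
# (names, addresses, non-US identifiers, free-text health information).
PII_PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),                  # e.g. 123-45-6789
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),      # simple email shape
    "PHONE": re.compile(r"\(?\b\d{3}\)?[ .-]?\d{3}[ .-]\d{4}\b"),  # e.g. (555) 123-4567
}


def find_pii(text: str) -> list[tuple[str, str]]:
    """Return (label, matched_text) pairs for every pattern hit, grouped by label."""
    hits = []
    for label, pattern in PII_PATTERNS.items():
        hits.extend((label, m.group()) for m in pattern.finditer(text))
    return hits


def redact(text: str) -> str:
    """Replace each match with a placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


sample = "Email jane.doe@example.com or call 555-123-4567; SSN 123-45-6789."
print(find_pii(sample))
print(redact(sample))
```

A pattern list like this is best treated as a first filter that catches obvious leaks; anything it misses still needs policy controls further down the pipeline.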

The Solution: Local Deployment, Dedicated Environments, and Secure Platforms

To mitigate these risks, businesses can opt for deploying LLMs locally, within dedicated environments, or using secure platforms like Amazon Bedrock. These approaches keep sensitive data on infrastructure you own or control through contractual and technical safeguards, rather than sending it to a shared public AI service.

Local Deployment of LLMs

Running LLMs on-premises means that all data processing occurs within your organization's own servers. This setup provides complete control over data flow and storage, significantly reducing the risk of external breaches.

- Enhanced Security: With local deployment, you have direct oversight of security protocols, access controls, and monitoring systems.

- Simpler Compliance: Keeping data in-house simplifies adherence to data protection and data residency regulations, although meeting them remains your responsibility.

- Customization Opportunities: Local models can be tailored to meet specific business needs without the limitations imposed by external providers.
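One practical way to enforce the "data stays in-house" rule is to check, before any prompt is sent, that the model endpoint sits on the local machine or a private network. The sketch below assumes the model is served over HTTP at an address you configure (the URLs in it are hypothetical); it accepts only `localhost` and loopback or private IP literals, and rejects everything else, including DNS names it cannot vouch for.

```python
import ipaddress
from urllib.parse import urlparse


def is_internal_endpoint(url: str) -> bool:
    """Return True only if the URL points at localhost, loopback, or a private IP range."""
    host = urlparse(url).hostname
    if host is None:
        return False
    if host == "localhost":
        return True
    try:
        addr = ipaddress.ip_address(host)
    except ValueError:
        # Other DNS names are rejected: this sketch does not resolve names,
        # so it cannot prove where they point.
        return False
    return addr.is_loopback or addr.is_private


def require_internal(url: str) -> str:
    """Raise before any data is sent if the endpoint looks external."""
    if not is_internal_endpoint(url):
        raise ValueError(f"Refusing to send data to non-internal endpoint: {url}")
    return url


print(is_internal_endpoint("http://10.0.4.17:8000/v1"))     # True
print(is_internal_endpoint("https://api.example.com/v1"))   # False
```

A check like this complements, rather than replaces, network-level controls such as firewall egress rules.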

Dedicated Environments

Alternatively, businesses can use private clouds or isolated servers provided by trusted vendors. These environments offer a middle ground between local deployment and cloud services.

- Scalability: Dedicated environments can be scaled according to business needs without compromising security.

- Managed Services: Vendors can provide maintenance and updates, easing the technical burden on your IT team.

- Isolation: Data is kept separate from other clients, reducing the risk of cross-contamination or unauthorized access.

Using Secure Platforms like Amazon Bedrock

Amazon Bedrock is a fully managed service from AWS that provides access to foundation models from Amazon and third-party providers through a single API. It allows businesses to build and scale generative AI applications securely.

Key Benefits:

- Secure Environment: Amazon Bedrock operates within the robust security framework of AWS, providing enterprise-grade security features.

- Data Privacy: AWS states that Bedrock does not use your prompts and completions to train its models or share them with third-party model providers, and AWS PrivateLink can keep traffic between your VPC and Bedrock off the public internet.

- Scalability and Flexibility: Easily scale your AI applications without managing underlying infrastructure.

- Compliance Support: AWS provides tools and resources to help you meet various compliance standards.
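For a sense of what calling Bedrock looks like, the sketch below sends one prompt to an Anthropic Claude model through the Bedrock Runtime `InvokeModel` operation. It assumes boto3 is installed and AWS credentials are configured; the Region and model ID in the usage comment are examples only, and other model families on Bedrock expect different request bodies. The client is passed in as a parameter so the request-building logic can be checked without network access.

```python
import json


def build_claude_body(prompt: str, max_tokens: int = 512) -> str:
    """Request body in the Anthropic Messages format used on Bedrock."""
    return json.dumps({
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,
        "messages": [
            {"role": "user", "content": [{"type": "text", "text": prompt}]}
        ],
    })


def ask_claude(client, model_id: str, prompt: str) -> str:
    """Send one prompt through a boto3 'bedrock-runtime' client and return the reply text."""
    response = client.invoke_model(
        modelId=model_id,
        body=build_claude_body(prompt),
        contentType="application/json",
        accept="application/json",
    )
    payload = json.loads(response["body"].read())
    # The reply is a list of content blocks; keep only the text blocks.
    return "".join(block["text"] for block in payload["content"] if block["type"] == "text")


# Example usage (requires boto3 and AWS credentials; Region and model ID are examples):
# import boto3
# client = boto3.client("bedrock-runtime", region_name="us-east-1")
# print(ask_claude(client, "anthropic.claude-3-haiku-20240307-v1:0", "Summarize our data policy."))
```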

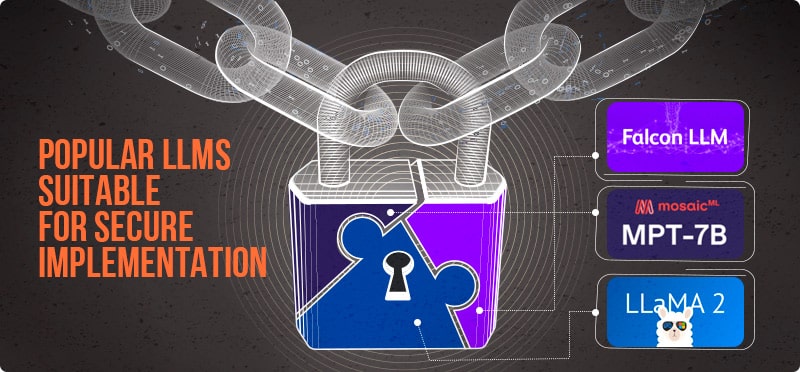

Popular LLMs Suitable for Secure Implementation

Several openly available LLMs can be deployed locally or within dedicated environments, which makes them well suited to businesses that prioritize data security. Below, we look more closely at the most prominent models, followed by Amazon Bedrock as a managed alternative.

LLaMA 2 Chat

Overview: Developed by Meta AI, LLaMA 2 Chat is a family of openly licensed language models fine-tuned for conversational applications. Building on its predecessor, LLaMA 2 offers improved performance and versatility.

Key Features:

- Scalable Model Sizes: Available in 7B, 13B, and 70B parameter versions, allowing businesses to choose based on computational resources.

- Fine-Tuned for Dialogue: Specifically trained for chat applications, making it adept at understanding and generating human-like conversational responses.

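- Community License: The LLaMA 2 Community License Agreement permits commercial use, subject to conditions such as an acceptable use policy and a separate license requirement for services with more than 700 million monthly active users.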
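To gauge which size fits your hardware, a rough rule of thumb is that the model weights alone need the parameter count multiplied by the bytes per parameter. The sketch below applies that arithmetic to the three LLaMA 2 sizes; it ignores the KV cache, activations, and framework overhead, so real requirements are higher, and the 4-bit figures assume a quantized build of the model.

```python
def weight_memory_gb(params_billion: float, bits_per_param: int) -> float:
    """Approximate memory for model weights only, in GB (1 GB = 10**9 bytes)."""
    bytes_per_param = bits_per_param / 8
    return params_billion * bytes_per_param  # billions of params x bytes each = GB


for size in (7, 13, 70):
    fp16 = weight_memory_gb(size, 16)
    int4 = weight_memory_gb(size, 4)
    print(f"LLaMA 2 {size}B: ~{fp16:.0f} GB at 16-bit, ~{int4:.1f} GB at 4-bit")
```

This is why the 70B model at 16-bit precision (about 140 GB of weights) generally needs several data-center GPUs, while a quantized 7B model can run on a single card.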

Advantages:

- Local Deployment: Can be run on-premises, ensuring that sensitive data remains within your secure infrastructure.

- Customization: Easily fine-tuned with your own data to better suit specific business needs.

- Cost-Effective: The weights are free to download, so there are no per-token licensing fees, although you still pay for the hardware and staff needed to run the model.
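Because the chat models were fine-tuned on a specific dialogue format, prompts generally need to follow it. The sketch below reproduces the single-turn LLaMA 2 chat template, with `[INST]` and `<<SYS>>` markers as in Meta's reference code; it omits the `<s>` beginning-of-sequence token, which the tokenizer normally adds, and does not handle multi-turn history. `build_llama2_prompt` is a hypothetical helper name.

```python
# Markers used by the LLaMA 2 chat fine-tune.
B_INST, E_INST = "[INST]", "[/INST]"
B_SYS, E_SYS = "<<SYS>>\n", "\n<</SYS>>\n\n"


def build_llama2_prompt(system: str, user: str) -> str:
    """Single-turn prompt: the system prompt is folded into the first [INST] block."""
    return f"{B_INST} {B_SYS}{system.strip()}{E_SYS}{user.strip()} {E_INST}"


print(build_llama2_prompt("Answer using only the provided context.", "Summarize our Q3 report."))
```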

Falcon LLM (Falcon 7B-Instruct and Falcon 40B-Instruct)

Overview: Falcon LLM is a series of state-of-the-art language models developed by the Technology Innovation Institute in the UAE. The Falcon 7B-Instruct and Falcon 40B-Instruct are instruction-tuned versions optimized for following human instructions.

Key Features:

- High Performance: When released in 2023, Falcon models ranked at or near the top of Hugging Face's Open LLM Leaderboard, with Falcon 40B taking first place.

- Instruction Tuning: Specifically designed to understand and execute user instructions, making them ideal for a wide range of applications.

- Open-Source Accessibility: Released under the Apache 2.0 license, facilitating commercial use and modification.

Advantages:

- Flexible Deployment: Can be implemented locally or within a dedicated environment, offering control over data privacy.

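- Multilingual Support: Falcon 40B was trained on English, German, Spanish, and French data (with limited capability in several other European languages), and Falcon 7B on English and French, which can benefit businesses operating in those markets.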

- Community and Support: Backed by an active community, providing resources and shared improvements.

MPT (MosaicML Pretrained Transformer)

Overview: MPT, developed by MosaicML, is a family of transformer models designed for efficient training and deployment. Models like MPT-7B and its variants are optimized for various tasks, including instruction following and chat applications.

Key Features:

- Efficient Architecture: Designed for high-speed training and inference, reducing computational costs.

- Variety of Models: Offers specialized versions such as MPT-7B-Instruct and MPT-7B-Chat for different applications.

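- Licensing: The base MPT-7B model is released under the Apache 2.0 license, which permits commercial use and customization. Terms differ between variants, however (MPT-7B-Chat, for example, is licensed for non-commercial use only), so check each model card before deployment.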

Advantages:

- Scalable Solutions: Suitable for businesses of all sizes due to its efficiency and flexibility.

- Local Deployment Ready: Can be deployed on-premises, ensuring data stays within your control.

- Customization and Fine-Tuning: Easily adaptable to specific domains or tasks through fine-tuning with proprietary data.
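Because license terms can differ between variants of the same model family (MPT-7B is Apache 2.0, while MPT-7B-Chat is CC-BY-NC-SA-4.0, i.e. non-commercial), some teams gate model adoption on an approved-license list. The sketch below shows one way to do that; the approved set and the model inventory are hypothetical examples, and `LicenseRef-Llama-2` is a placeholder identifier rather than an official SPDX ID, so always confirm terms against each model card.

```python
# Licenses your legal team has already approved for commercial use (example set).
APPROVED_LICENSES = {"Apache-2.0", "MIT"}


def needs_legal_review(inventory: dict[str, str]) -> list[str]:
    """Return the models whose license is not on the approved list, sorted by name."""
    return sorted(
        name for name, license_id in inventory.items()
        if license_id not in APPROVED_LICENSES
    )


# Hypothetical inventory mapping model names to SPDX-style license identifiers.
inventory = {
    "mpt-7b": "Apache-2.0",
    "mpt-7b-chat": "CC-BY-NC-SA-4.0",
    "falcon-7b-instruct": "Apache-2.0",
    "llama-2-13b-chat": "LicenseRef-Llama-2",
}
print(needs_legal_review(inventory))  # ['llama-2-13b-chat', 'mpt-7b-chat']
```

Flagging a model for review does not mean it is unusable; custom licenses such as LLaMA 2's simply need a human decision rather than an automatic pass.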

Amazon Bedrock

Overview: Amazon Bedrock is a managed service that provides access to foundation models from leading AI companies, including Amazon's own Titan models, through a scalable, secure, and fully managed AWS service.

Key Features:

- Access to Multiple Models: Offers a variety of foundation models, including those suited to specific tasks like text generation, summarization, and chatbots.

- Integration with AWS Services: Seamlessly integrates with other AWS services, facilitating a unified workflow.

- No Data Retention for Model Training: AWS does not retain or use your data for training the underlying models, ensuring data privacy.

Advantages:

- Secure Environment: Leverages AWS's robust security infrastructure, including encryption at rest and in transit.

- Scalability: Automatically scales to meet your application's demands without the need to manage infrastructure.

- Compliance and Governance: AWS provides comprehensive compliance certifications and attestations, aiding in regulatory compliance.

- Ease of Use: Simplifies the process of deploying AI applications, reducing time to market.

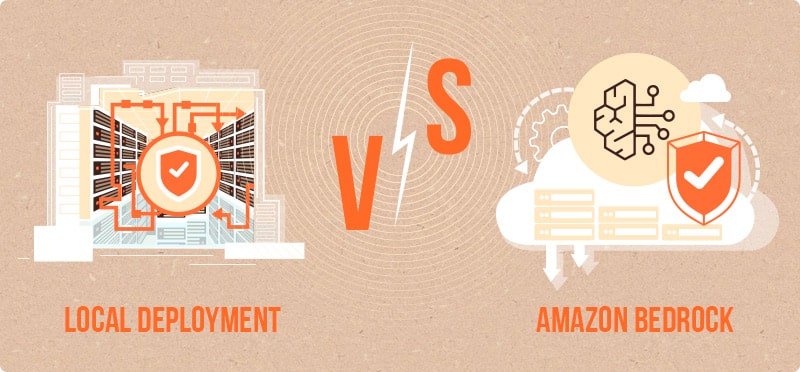

Comparing Approaches: Local Deployment vs. Amazon Bedrock

When deciding between local deployment and using a secure platform like Amazon Bedrock, several factors come into play.

Data Control and Security

- Local Deployment: Offers maximum control over data as everything is contained within your own infrastructure. Ideal for organizations with strict data sovereignty requirements.

- Amazon Bedrock: Provides a secure environment within AWS's infrastructure. However, data is processed on AWS-managed infrastructure rather than on your own servers, so it still leaves your premises, which may be a concern for some businesses.

Scalability

- Local Deployment: Scalability depends on your hardware capabilities. Scaling up may require significant investment in infrastructure.

- Amazon Bedrock: Scales on demand without additional infrastructure investment, within per-account service quotas on requests and tokens per minute; Provisioned Throughput is available for predictable high-volume workloads.

Cost Considerations

- Local Deployment: Involves upfront costs for hardware, maintenance, and personnel. Over time, this can be cost-effective for large-scale operations.

- Amazon Bedrock: Operates on a pay-as-you-go model, reducing upfront costs but potentially increasing expenses with high usage.
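The cost trade-off can be framed as a break-even point: the monthly token volume at which pay-per-token charges equal the fixed monthly cost of running your own infrastructure. The sketch below computes it; every figure in the example is a made-up placeholder, not a real AWS or hardware price, and it ignores the different prices many models charge for input and output tokens.

```python
def break_even_tokens(fixed_monthly_cost: float, price_per_million_tokens: float) -> float:
    """Monthly tokens at which pay-per-use spend equals a fixed monthly cost."""
    return fixed_monthly_cost / price_per_million_tokens * 1_000_000


def cheaper_option(monthly_tokens: float, fixed_monthly_cost: float,
                   price_per_million_tokens: float) -> str:
    """Compare estimated monthly spend for local hosting vs. pay-as-you-go."""
    usage_cost = monthly_tokens / 1_000_000 * price_per_million_tokens
    return "local" if fixed_monthly_cost < usage_cost else "pay-as-you-go"


# Placeholder figures: $3,000/month for amortized hardware, power, and upkeep; $2 per million tokens.
print(break_even_tokens(3_000, 2.0))              # 1500000000.0
print(cheaper_option(500_000_000, 3_000, 2.0))    # pay-as-you-go
print(cheaper_option(2_000_000_000, 3_000, 2.0))  # local
```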

Compliance and Certification

- Local Deployment: Compliance is managed internally, which can be advantageous if you have a dedicated compliance team.

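- Amazon Bedrock: AWS holds a range of compliance certifications and attestations (e.g., ISO 27001 and SOC reports), and Bedrock is HIPAA eligible, which can simplify meeting regulatory requirements. GDPR, however, is a regulation rather than a certification, and under AWS's shared responsibility model you remain responsible for how you use the service.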

Customization and Control

- Local Deployment: Allows for extensive customization of models and systems, ideal for specialized applications.

- Amazon Bedrock: Supports fine-tuning and continued pre-training for selected models, but does not give you access to model weights or the serving stack, so it cannot match the control provided by local deployment.

Time to Implementation

- Local Deployment: May require significant time for setup, configuration, and testing.

- Amazon Bedrock: Enables quicker deployment with managed services, reducing time to market.

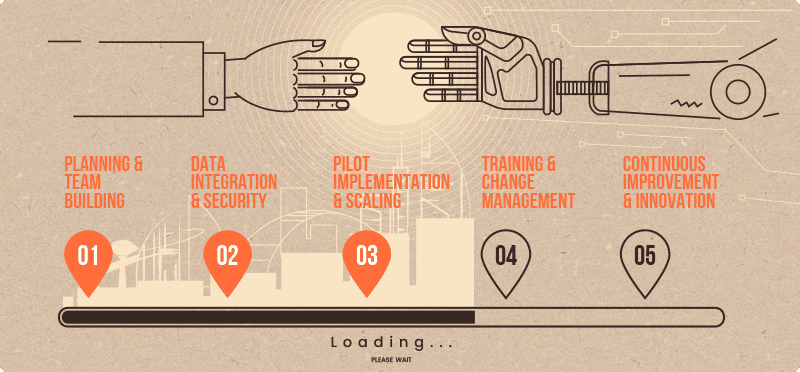

Implementing Secure LLM Solutions: A Step-by-Step Guide

Transitioning to a secure LLM deployment requires careful planning and execution. Here’s how businesses can approach this process:

Assessing Business Needs

Identify the specific tasks the LLM will perform and the type of data it will handle. Understanding your requirements helps in selecting the appropriate model and deployment strategy.

Choosing the Right Approach

Decide between local deployment, dedicated environments, or using a platform like Amazon Bedrock based on:

- Data Sensitivity: For highly sensitive data, local deployment might be preferable.

- Resource Availability: Consider your organization's capacity to manage infrastructure and security.

- Scalability Needs: If expecting rapid growth, a scalable platform like Amazon Bedrock may be beneficial.

- Compliance Requirements: Evaluate which approach better aligns with regulatory obligations.
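The criteria above can be written down as a simple, reviewable rule set. The sketch below is one hypothetical encoding; the field names, the order of the checks, and the three outcomes are assumptions for illustration, and a real decision would weigh these factors together with legal and security input rather than apply fixed rules.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    highly_sensitive: bool          # e.g. trade secrets or health records
    cloud_processing_allowed: bool  # per contracts, policies, and regulators
    has_infra_team: bool            # staff to run servers, patching, monitoring
    expects_rapid_growth: bool      # usage likely to grow quickly


def suggest_approach(req: Requirements) -> str:
    """Apply the checks in order: sensitivity first, then capacity and growth."""
    if req.highly_sensitive and not req.cloud_processing_allowed:
        return "local deployment"
    if req.expects_rapid_growth or not req.has_infra_team:
        return "managed platform (e.g. Amazon Bedrock)"
    return "dedicated environment"


print(suggest_approach(Requirements(True, False, True, False)))  # local deployment
```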

Preparing Infrastructure

Ensure that your hardware and network systems can support the chosen deployment method.

- For Local Deployment: Invest in robust servers, security systems, and technical expertise.

- For Amazon Bedrock: Set up your AWS environment with appropriate security configurations.
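For the Bedrock path, security configuration typically starts with least-privilege IAM permissions. The sketch below generates an identity-based policy that allows invoking a single foundation model in a single Region and nothing else; the Region and model ID are examples, and a real setup would usually add further controls such as VPC endpoints (AWS PrivateLink) and logging.

```python
import json


def invoke_only_policy(region: str, model_id: str) -> dict:
    """IAM policy allowing InvokeModel on one foundation model and nothing else."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["bedrock:InvokeModel"],
                # Foundation-model ARNs have an empty account-ID segment.
                "Resource": f"arn:aws:bedrock:{region}::foundation-model/{model_id}",
            }
        ],
    }


print(json.dumps(invoke_only_policy("eu-central-1", "amazon.titan-text-express-v1"), indent=2))
```

Streaming responses use a separate action (`bedrock:InvokeModelWithResponseStream`), so it would need to be added explicitly if your application streams output.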

Fine-Tuning the Model

Customize the LLM using your data to improve its performance on specific tasks.

- Local Models: Fine-tuning involves training the model within your infrastructure.

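- Amazon Bedrock: Supports fine-tuning of selected models using training data stored in your own Amazon S3 bucket; the resulting custom model is private to your account, and AWS states the data is not used to train the base models.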
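Both routes start from a curated training set. The sketch below writes prompt/completion pairs as JSON Lines, the layout Bedrock documents for fine-tuning some text models such as Amazon Titan Text; other models and local training frameworks expect different fields, so treat the field names as an assumption to check against the target model's documentation. `to_jsonl` is a hypothetical helper.

```python
import json


def to_jsonl(examples: list[dict[str, str]]) -> str:
    """Serialize prompt/completion pairs, one JSON object per line."""
    lines = []
    for i, ex in enumerate(examples):
        prompt = ex.get("prompt", "").strip()
        completion = ex.get("completion", "").strip()
        if not prompt or not completion:
            # Reject incomplete examples instead of silently training on them.
            raise ValueError(f"Example {i} is missing a prompt or completion")
        lines.append(json.dumps({"prompt": prompt, "completion": completion}))
    return "\n".join(lines) + "\n"


print(to_jsonl([{"prompt": "Classify: invoice overdue", "completion": "billing"}]), end="")
```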

Ongoing Monitoring and Maintenance

Regularly update the model and monitor its performance.

- Local Deployment: Requires internal teams to manage updates and security patches.

- Amazon Bedrock: AWS handles infrastructure maintenance, but you are responsible for your application and data security within the AWS environment.
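Monitoring is easier to reconcile with privacy if the logs themselves avoid storing sensitive text. The sketch below wraps any generation function and builds one audit record per call with metadata, latency, and a SHA-256 fingerprint of the prompt instead of the prompt itself. The field names are hypothetical, the fingerprint supports correlation rather than guaranteeing anonymity (short, predictable prompts can be guessed), and where records are shipped is left open.

```python
import hashlib
import time


def audit_record(user_id: str, model_id: str, prompt: str, latency_s: float) -> dict:
    """Metadata for one LLM call; the raw prompt is deliberately not stored."""
    return {
        "timestamp": time.time(),
        "user_id": user_id,
        "model_id": model_id,
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "prompt_chars": len(prompt),
        "latency_ms": round(latency_s * 1000),
    }


def timed_call(generate, user_id: str, model_id: str, prompt: str):
    """Run any generate(prompt) function and return (output, audit_record)."""
    start = time.perf_counter()
    output = generate(prompt)
    record = audit_record(user_id, model_id, prompt, time.perf_counter() - start)
    return output, record


# Usage with a stand-in "model" (str.upper) so the sketch runs anywhere:
output, record = timed_call(str.upper, "u-42", "local-llama-2-13b-chat", "hello")
print(output, record["prompt_chars"], record["latency_ms"])
```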

Leveraging Expert Partnerships for Seamless Integration

Implementing secure LLM solutions can be complex, especially without extensive in-house AI expertise. Partnering with experienced software development firms can simplify this process.

A-Team Global: Your Partner in Secure AI Deployment

At A-Team Global, we specialize in custom software development and have extensive experience in AI technologies. Our team can assist you in:

- Strategic Planning: We help you assess your needs and develop a roadmap for AI integration.

- Infrastructure Setup: Our experts ensure that your systems are ready to support LLM deployment, focusing on security and efficiency.

- Model Customization: We tailor the LLM to your specific business requirements, enhancing its effectiveness.

- Deployment Assistance: Whether you choose local deployment or platforms like Amazon Bedrock, we guide you through the process.

- Training and Support: We provide training for your staff and ongoing support to ensure smooth operation.

Our commitment to excellence and customer satisfaction has made us a trusted partner for businesses looking to innovate while safeguarding their data. Learn more about our services at A-Team Global Software Development Outsourcing.

Balancing Innovation with Compliance

Adopting GenAI technologies doesn't necessitate compromising on data security or regulatory compliance. By choosing the appropriate deployment strategy, businesses can harness the power of AI while maintaining full control over their sensitive information.

Staying Competitive

AI capabilities can significantly improve operational efficiency, customer engagement, and decision-making processes. By implementing secure AI solutions, businesses can stay ahead in a competitive market.

Maintaining Trust

Protecting customer and employee data is crucial for building and maintaining trust. Demonstrating a commitment to data security enhances your brand reputation and fosters loyalty.

Ensuring Regulatory Compliance

Selecting the right deployment approach simplifies adherence to data protection laws, reducing the risk of legal issues and fines. Whether through local deployment or compliant cloud services like Amazon Bedrock, businesses can operate confidently within regulatory frameworks.

Case Studies: Success Stories in Secure AI Implementation

Enhancing Customer Service with AI

A mid-sized retail company sought to improve its customer service through AI-driven chatbots but was concerned about data privacy. By deploying LLaMA 2 Chat locally with the assistance of A-Team Global, they successfully implemented a secure chatbot that enhanced customer interactions without exposing sensitive data externally.

Streamlining Internal Processes with Amazon Bedrock

A healthcare organization needed to process patient data securely while complying with HIPAA regulations. They chose Amazon Bedrock for its secure, compliant environment. A-Team Global assisted in integrating Bedrock into their workflows, enabling efficient data processing while ensuring patient confidentiality.

Scaling AI Solutions in Finance

A financial services firm wanted to automate document analysis using AI but required scalability and compliance with strict regulations. By leveraging Amazon Bedrock, they accessed powerful AI models without managing infrastructure.

A-Team Global customized the solution to meet their specific needs, resulting in improved efficiency and regulatory compliance.

Conclusion

The future of business lies in effectively harnessing the power of AI technologies like LLMs. For organizations handling sensitive data, options like local deployment, dedicated environments, and secure platforms such as Amazon Bedrock offer viable paths forward. These approaches combine the transformative potential of GenAI with robust security measures necessary to protect your most valuable assets.

By carefully selecting the right models (such as LLaMA 2 Chat, Falcon LLM, or MPT) or managed services like Amazon Bedrock, choosing a suitable deployment strategy, and partnering with experienced professionals like A-Team Global, you can unlock new opportunities for growth and efficiency, all while keeping your data exactly where it belongs: safe and under your control.
